Artificial intelligence, or AI as we more commonly refer to it, is old news. In fact, nearly 70 years have passed since the first publicly announced program on AI in the mid-1950s. That’s longer than the roughly 50 years between the launch of the first satellite (Sputnik 1, in 1957) and the release of the iPhone (in 2007). While there have been occasional breakthroughs in AI over the years, until recently it generally wasn’t dinner table conversation.

ChatGPT changed everything. Not because it was SO fundamentally different from its predecessors, but because it was free, accessible, and user-friendly. It’s the same reason the iPhone became the de facto standard for cell phones: it had both the brains and the looks.

Now, nearly every company on the planet is racing to capitalize on the new hotness. Suddenly, applications that data scientists and tech practitioners have been talking about for years are springing out of the woodwork. Image generation, text creation, videos, code, chatbots and smart assistants are just a few of the early innovations that have been demonstrated. In the realm of cybersecurity, an equal revolution is under way.

This blog post focuses on the security industry and some of the ways we at Exabeam envision this new wave of AI augmenting our customer experience, primarily in the security operations center (SOC). We’ll explore the growth of generative AI, the potential for natural language processing (NLP) to be applied to security domains, including SIEM, and how different roles in a SOC could use AI features.

In this article:

- The growth of generative AI

- Natural language processing

- Data onboarding

- Threat detection and tuning

- Threat explaining

- AI for different roles within a SOC

- Key takeaways

The growth of generative AI

At Exabeam, data science and machine learning have been at the heart of our products from the beginning. Modeling normal user behavior and deviations from it has allowed us to pinpoint anomalous users and activity without relying on static, signature-based detection. Generative AI, while related, is different in that it uses algorithms to create original content, such as images, videos, or text. This technology has already started to impact industries ranging from healthcare to entertainment.

For example, generative AI is being used to create synthetic images of patients for medical training purposes, while also being used to create realistic virtual environments for video games and movies. In technology, early applications have included automated website creation, code development and auditing, QA testing, and even automation of the entire software development lifecycle. The possibilities seem boundless.

In the security industry, AI has massive disruption potential as well. For example, it has been used to create realistic phishing emails that train employees to recognize and avoid them. It has also been used to generate synthetic data, such as malware samples, for security testing. More recently, researchers have begun to hook tools like GPT-4 and ChatGPT into end-to-end penetration testing.

By creating synthetic data that simulates real-world attacks, security teams can test their defenses against a wider range of scenarios without the risk of real attacks. (While there are equally concerning malicious applications of generative AI in phishing and other attacks, that’s a focus for a different conversation entirely.)

We at Exabeam have begun to explore generative AI using Google’s large language models (LLMs) to generate simple but effective technical threat summaries of single or grouped detections. These same models may be able to answer important ad hoc user questions, like “What is this port used for?” or “What does this Windows event code mean?” Creating valuable context is one of the core strengths of a good generative AI system. We are also exploring ways to simplify the Search and query interface further by using NLP to generate complex queries from simple, plain-language questions. These capabilities can provide exceptional value to an organization, giving junior analysts and even upper management the ability to break down complex threats and understand risk and business impact. Let’s delve deeper into NLP applications next.
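
To illustrate what a threat summary request might involve, here is a minimal Python sketch that assembles a prompt from a group of related detections. The `Detection` record, its fields, and the prompt wording are hypothetical assumptions for illustration, not Exabeam’s data model or its actual prompts, and the step of sending the prompt to an LLM is deliberately left out rather than guessing at a specific model API.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Detection:
    """Hypothetical minimal detection record; real products carry many more fields."""
    timestamp: datetime
    entity: str  # user or host the detection is attributed to
    tactic: str  # e.g. a MITRE ATT&CK tactic name such as "Lateral Movement"
    name: str    # short detection title


def build_summary_prompt(detections: list[Detection]) -> str:
    """Assemble a plain-text prompt asking an LLM to summarize related detections.

    Detections are listed oldest first so the model sees the sequence of events.
    Sending the prompt to a model is intentionally out of scope for this sketch.
    """
    ordered = sorted(detections, key=lambda d: d.timestamp)
    lines = [
        "You are assisting a SOC analyst.",
        "Summarize the related detections below in three sentences or fewer,",
        "state the likely attacker objective, and suggest one next investigative step.",
        "",
        "Detections:",
    ]
    for d in ordered:
        lines.append(f"- {d.timestamp.isoformat()} | {d.entity} | {d.tactic} | {d.name}")
    return "\n".join(lines)
```

Ordering detections by time before summarizing is a deliberate choice: it hands the model the sequence of events, which is much of what makes a grouped summary more useful than a list of isolated alerts.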

Natural language processing

Natural language processing is a branch of AI that focuses on understanding and processing human language. It enriches the end-user experience by providing a translation layer between human language, both spoken and written, and complex computer systems. While early applications included therapy chatbots and grammar checkers, advances in NLP as a science have made it far easier to retrieve and manipulate information using plain language.

A key application of NLP in security is search. Using NLP, security teams can search large volumes of security data, such as logs, with everyday terminology and context instead of a formal query syntax. This allows them to identify security incidents quickly and respond to them more efficiently. Let’s look at an example that hits close to home.

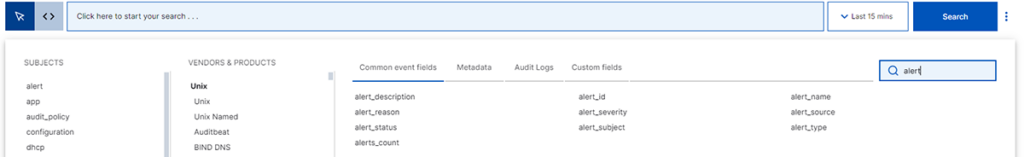

Today, in Exabeam Search, users can create queries using a simple wizard to select and autofill fields to retrieve parsed security logs and events.

More advanced users can write these queries directly, which provides similar search functionality with more granular control.

However, imagine that it is your first day on the job as an analyst in a small SOC. You haven’t yet been exposed to the product or received basic training on the search functionality. Furthermore, an hour after you start, the Operations team calls to say there’s been a network breach, detected by one of your Palo Alto Networks appliances.

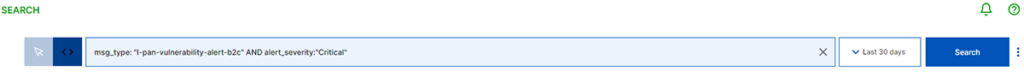

Instead of relying on traditional queries, NLP could be used to bridge the gap between user experience and the product’s capabilities. You could simply type:

“Show me critical event logs from my Palo Alto network appliances over the last 6 hours.”

An NLP layer could recognize the intent behind the request, translate it into an actual query, and execute it, returning the expected results. This is a simplistic example, but it highlights the value of adding NLP as a layer on top of existing search functionality. Roles ranging from analysts and threat hunters all the way up to CISOs would be able to get answers to security questions that previously would have consumed hours of work.
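
As a rough illustration of the translation step, the sketch below maps a narrow class of plain-English requests onto a filter expression using keyword tables and a regular expression. This is a deliberately simple rule-based stand-in for a real NLP model, and the output syntax (`vendor = ...`, `severity = ...`, `time >= now() - ...`) is hypothetical, not Exabeam’s query language.

```python
import re

# Hypothetical vocabularies for this sketch; a real NLP layer would rely on a
# trained language model rather than hand-written keyword tables.
VENDORS = {"palo alto": "Palo Alto Networks", "pan": "Palo Alto Networks"}
SEVERITIES = ("critical", "high", "medium", "low")
UNIT_SECONDS = {"minute": 60, "hour": 3600, "day": 86400}


def translate(request: str) -> str:
    """Translate a narrow class of plain-English log requests into a
    hypothetical filter expression (not Exabeam's real query syntax)."""
    text = request.lower()
    clauses = []
    # Vendor: first known phrase that appears as a whole word or phrase.
    for phrase, vendor in VENDORS.items():
        if re.search(rf"\b{re.escape(phrase)}\b", text):
            clauses.append(f"vendor = '{vendor}'")
            break
    # Severity: first severity keyword mentioned as a whole word.
    for severity in SEVERITIES:
        if re.search(rf"\b{severity}\b", text):
            clauses.append(f"severity = '{severity}'")
            break
    # Relative time window such as "last 6 hours" or "last 30 minutes".
    match = re.search(r"\blast (\d+) (minute|hour|day)s?\b", text)
    if match:
        seconds = int(match.group(1)) * UNIT_SECONDS[match.group(2)]
        clauses.append(f"time >= now() - {seconds}s")
    if not clauses:
        raise ValueError("request not understood; fall back to the query wizard")
    return " AND ".join(clauses)
```

Applied to the request above, `translate` returns `vendor = 'Palo Alto Networks' AND severity = 'critical' AND time >= now() - 21600s`. A production system would replace the keyword tables with a language model, but it would likely keep the same overall shape: extract the intent and entities, then emit a structured query.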

We are also interested in the capability of NLP to create dashboards that provide real-time information about security incidents. While the Exabeam Dashboards feature already provides rich, holistic context for security data, natural language integration for creating and tuning these dashboards could dramatically improve the user experience.

Going back to our SOC analyst scenario, imagine being able to simply type:

“Create a CISO-level dashboard which effectively highlights events, users, and detections related to the malware detected by PAN yesterday.”

We are also focusing on three key areas of potential future AI and NLP capabilities:

- Data onboarding

- Detection tuning

- Threat explaining

Data onboarding

“It’s not who has the best algorithm that wins, it’s who has the most data.” This notable quote, attributed to Stanford University professor Andrew Ng, highlights the importance of collecting vast amounts of data for use in complex AI applications. However, it doesn’t tell the full story when it comes to onboarding data for use in features such as Exabeam Advanced Analytics.

Today, we use parsers to comb through terabytes of data, extracting key nuggets of information such as users, machines, credentials, hosts, and much more. These parsers, while invaluable for extracting event fields, are created by humans. Furthermore, even small changes in the logs being parsed, or deviations from expected standards, can cause parsers to miss key information.

This is an area where AI could deliver tremendous benefits. Machine learning techniques such as clustering logs by similarity, looking for outliers from an expected format, and identifying new log types could automate or assist parser creation, testing, and validation. This could augment, or potentially even replace, the need for humans to create and implement effective parsers. It would directly help the security engineers responsible for data onboarding, and it would also pay huge dividends for analysts and hunters by ensuring they have clean data to search, detect, and respond to.
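
To make “clustering logs by similarity” more concrete, here is a minimal Python sketch of template-based log clustering, loosely inspired by the published Drain log-parsing algorithm but much simplified. Lines with the same number of tokens are merged when enough positions match, differing positions become a `<*>` wildcard, and a line that matches no known template is flagged as a possible new format for parser review. The 0.6 similarity threshold and the numeric-token masking are illustrative assumptions, not tuned values.

```python
import re

# Purely numeric or dotted-numeric tokens (ports, PIDs, IPv4 addresses)
# are treated as variables up front.
_NUMERIC = re.compile(r"^\d+(?:\.\d+)*$")
WILDCARD = "<*>"


def tokenize(line: str) -> list[str]:
    return [WILDCARD if _NUMERIC.match(tok) else tok for tok in line.split()]


def similarity(template: list[str], tokens: list[str]) -> float:
    """Fraction of positions where the tokens agree; wildcards match anything.
    Assumes template and tokens have the same, non-zero length."""
    same = sum(1 for t, tok in zip(template, tokens) if t == tok or t == WILDCARD)
    return same / len(tokens)


def cluster_logs(lines, threshold=0.6):
    """Greedy single-pass clustering of log lines into templates.

    Returns a list of [template_tokens, count] pairs in first-seen order.
    """
    clusters = []
    for line in lines:
        tokens = tokenize(line)
        if not tokens:
            continue  # skip blank lines
        best, best_sim = None, 0.0
        for cluster in clusters:
            if len(cluster[0]) == len(tokens):
                sim = similarity(cluster[0], tokens)
                if sim > best_sim:
                    best, best_sim = cluster, sim
        if best is not None and best_sim >= threshold:
            # Generalize the template: positions that disagree become wildcards.
            best[0] = [t if t == tok else WILDCARD for t, tok in zip(best[0], tokens)]
            best[1] += 1
        else:
            clusters.append([tokens, 1])
    return clusters


def is_new_format(clusters, line, threshold=0.6) -> bool:
    """True if the line fits none of the known templates: a candidate for parser review."""
    tokens = tokenize(line)
    return not any(
        len(template) == len(tokens) and similarity(template, tokens) >= threshold
        for template, _count in clusters
    )
```

For example, two `Accepted password for ...` sshd lines that differ only in user name, IP address, and port collapse into the single template `Accepted password for <*> from <*> port <*> ssh2`, while a line with an unfamiliar shape is reported by `is_new_format`.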

This data is parsed and “onboarded” through the Exabeam ingestion pipeline, where engines determine both normal and abnormal behavior, leading to investigation cases. Ultimately, these notable behaviors are investigated by SOC engineers and analysts. It should be easy to see just how critical the data onboarding process is to the end-user experience.

Threat detection and tuning

Detections are core to a SOC’s ability to discover and understand threats to its environment. Exabeam detections are based on security logic defined by correlation rules, anomaly rules, and third-party security products indicating suspicious or malicious activity. NLP crosses over into detection tuning as well: a SOC engineer could potentially use NLP to author detections, including complex correlation rules, without needing to know any query or aggregation logic.

Today, users or Exabeam researchers can author correlation rules manually, such as the following, which could identify an attacker who is attempting to disable the Windows Defender service:

activity_type = 'process-create' && toLower(process_name)='sc.exe' && contains(toLower(process_command_line), 'stop', 'windefend')
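
To show what the rule above actually evaluates, here is the same boolean logic rendered as a small Python predicate over a single event. It assumes events arrive as dictionaries keyed by the field names in the rule, and it assumes `contains(..., 'stop', 'windefend')` requires both substrings to appear; if the query language treats multiple arguments as any-of, swap `all` for `any`.

```python
def disables_defender(event: dict) -> bool:
    """Illustrative Python rendering of the Windows Defender correlation rule.

    Assumptions: events are dicts keyed by the rule's field names, and
    contains(field, 'stop', 'windefend') requires BOTH substrings to appear.
    """
    # activity_type = 'process-create'
    if event.get("activity_type") != "process-create":
        return False
    # toLower(process_name) = 'sc.exe'
    if (event.get("process_name") or "").lower() != "sc.exe":
        return False
    # contains(toLower(process_command_line), 'stop', 'windefend')
    cmdline = (event.get("process_command_line") or "").lower()
    return all(term in cmdline for term in ("stop", "windefend"))
```

For instance, an event with `process_name` set to `SC.EXE` and a command line of `sc stop WinDefend` matches, while `sc query WinDefend` does not: the comparison is case-insensitive but still requires the `stop` keyword.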

While this is a simple rule, imagine the power of being able to create the same content with a natural language statement, such as:

“Author a correlation rule which will detect the suspicious activity of disabling the Windows Defender service.”

This type of capability could have beneficial implications for each of the SOC roles from engineer to CISO, which we’ll discuss shortly.

Threat explaining

The final area of AI exploration for us spans triage, investigation, and response, which we group under the term “threat explaining.” It’s one thing to accurately detect and identify security anomalies, malicious or suspicious behavior, and the tactics, techniques, and procedures (TTPs) that accompany them. It becomes more complicated when you attempt to explain a threat holistically, which means combining multiple related detections over time with the chain of events that led to the outcome.

For example, a user’s credentials being compromised, a login to a laptop from a foreign country, privilege escalation, lateral movement to a critical asset, and data exfiltration via a command-and-control server are all valuable individual detections, but they tell a very different story when combined and explained as a single external attacker threat. A threat explainer capability could provide context that would otherwise need to be added manually, such as nation-state attribution for a series of threats that resemble Fancy Bear (APT28) behavior.
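
A first step toward threat explaining is stitching individual detections into chains. The minimal Python sketch below groups detections by user, starts a new chain whenever the gap between consecutive detections exceeds a time window, and renders each chain as a one-line narrative. The `TimedDetection` record, the stage labels, and the 24-hour window are hypothetical assumptions; a real explainer would correlate on more entities than the user and would likely hand the resulting chain to a generative model to write the prose.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class TimedDetection:
    """Hypothetical minimal detection record used only for this sketch."""
    time: datetime
    user: str
    stage: str  # illustrative attack-stage label, e.g. "lateral movement"


def chain_by_user(detections, window=timedelta(hours=24)):
    """Group detections per user into time-ordered chains.

    A detection joins the user's current chain if it occurred within `window`
    of the previous detection in that chain; otherwise it starts a new chain.
    Returns a dict mapping each user to a list of chains (lists of detections).
    """
    chains = {}
    for det in sorted(detections, key=lambda x: (x.user, x.time)):
        user_chains = chains.setdefault(det.user, [])
        if user_chains and det.time - user_chains[-1][-1].time <= window:
            user_chains[-1].append(det)
        else:
            user_chains.append([det])
    return chains


def explain(chain):
    """Render one chain as a single-line, human-readable narrative."""
    steps = " -> ".join(det.stage for det in chain)
    span = chain[-1].time - chain[0].time
    return f"{chain[0].user}: {len(chain)} related detections over {span}: {steps}"
```

Run over the scenario above, with five detections for one user spread across a single day, `explain` produces a line such as `jdoe: 5 related detections over 7:00:00: credential compromise -> foreign login -> privilege escalation -> lateral movement -> exfiltration`.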

Generative AI and NLP could each have an impact in this area, providing the ability to explain threats in natural language, generate dynamic response playbooks for threats, and identify trends to drive tuning. While threat explanation is a core component of our product direction already, the additional layer of content creation leveraging AI is an area we are excited to investigate.

AI for different roles within a SOC

Different roles within a SOC, including security engineers, analysts, and CISOs, can all benefit from the features of AI. For security engineers, AI can help to automate routine tasks, such as patching and vulnerability scanning. This can free up their time to focus on more complex tasks, such as designing and implementing new security measures.

For security analysts, AI can help to identify security incidents more quickly and accurately. This can help them to respond to incidents more effectively and reduce the risk of successful attacks. AI can also help analysts to create more accurate detection rules, based on patterns identified in security logs.

Threat hunters can leverage the output from each of these capabilities: higher-fidelity detections, an improved search experience, and stronger threat explanations that sharpen their hunts.

SOC managers will be able to understand complex threats more easily, and use non-technical inputs via NLP to search, develop playbooks, and generate and interpret dashboards.

CISOs can benefit from AI by gaining a better understanding of their organization’s security posture. AI can provide real-time information about security incidents and vulnerabilities, allowing CISOs to make more informed decisions about where to focus their resources.

Key takeaways

AI is rapidly transforming the security industry, with generative AI and NLP leading the way. In the SOC, generative AI can be used to create meaningful, actionable security content and conclusions, while NLP can simplify and enhance the user experience for activities like searching, dashboarding, event correlation, and much more. Different roles within a SOC can all benefit from AI, and as we continue to build out our threat detection, investigation, and response (TDIR) capabilities, we will be looking closely at each of these exciting capabilities to deliver world-class security products and experiences.

Want to learn more about the uses of AI and ML in security and IT?

Register for our upcoming webinar: What is the Future of AI in IT and Security?

It’s no secret that the shortage of cybersecurity professionals and experts poses a significant challenge. The buzz around Auto-GPT and security has sparked discussions about the potential of AI to fill the gaps and even enable organizations to build a fully computerized SOC. But is this a dream or a budding nightmare for organizations?

In this webinar, our Exabeam security experts will dive into the computational and operational efficiencies that automation brings. They’ll also provide valuable insight into the critical considerations, boundaries, and safeguards that organizations must establish when adopting AI and other emerging technologies.

You’ll gain a clear understanding of these topics and more:

- Assessing the risks associated with OpenAI and other NLP/LLM text searching interfaces

- Investigating the true potential of AI or ML in replacing human roles and responsibilities, and methodologies for evaluating AI systems

- Differentiating between ML and AI for chatbots in the context of security detection

- Demystifying the terminology associated with AI, including machine learning, deep learning, natural language processing, and recurrent neural networks (RNNs)

- Understanding the nuances between structured and unstructured data, as well as supervised and unsupervised approaches
