In early 2020, the FBI issued a disturbing warning: deepfake technology (the ability to generate highly realistic images, video, or audio) was already able to dupe certain biometric tests.

Fast-forward to the present, and the ever-expanding capabilities of artificial intelligence (AI) pose profound challenges to the security operations center (SOC). Threat actors are already developing new tactics for overcoming and evading security measures, and more methods are likely to emerge.

A new generation of threats

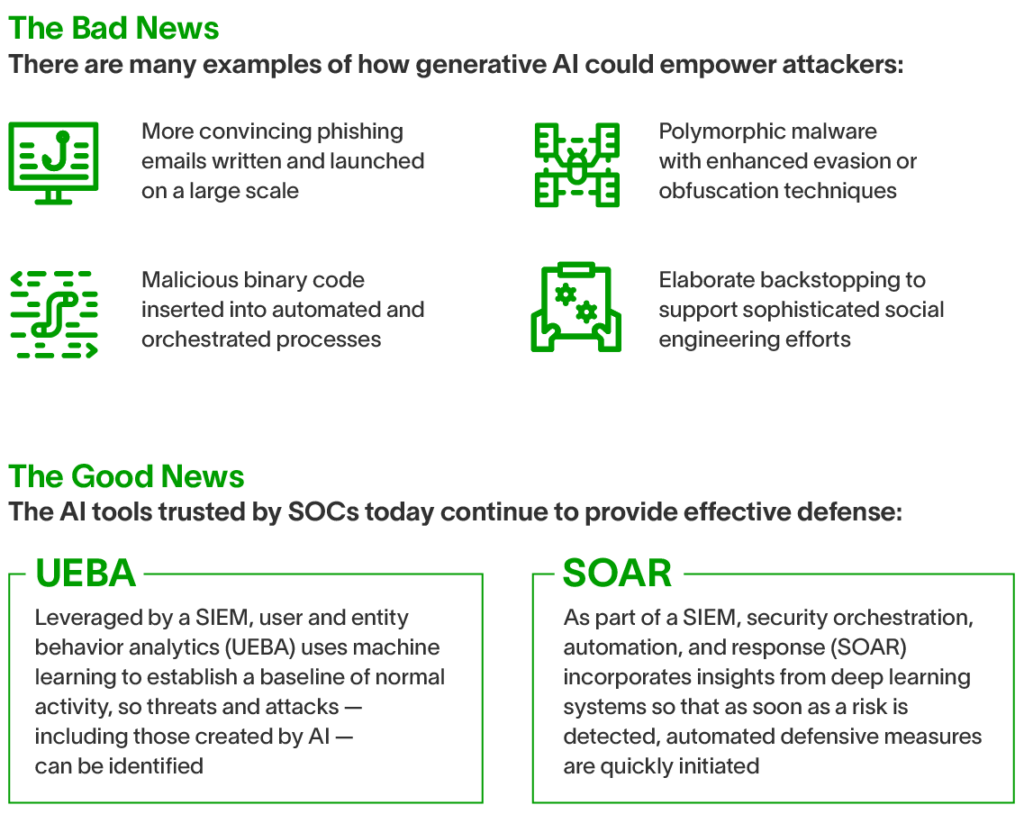

Beyond the ability to create deepfakes, generative AI can automatically produce text and write code, which can be used to undermine an organization’s defenses — sometimes in surprising ways. Consider these five scenarios.

1. Phishing on a whole new level

Cybercriminals have always known that human error is often an organization’s weakest link. Historically, phishing has been the most common type of social engineering — and in the age of generative AI, threat actors can automatically create more authentic-sounding messages that emulate a person’s specific writing style. They can also deploy them with far greater efficiency on a far grander scale.

2. Malware with a thousand faces

Analysts are already familiar with the problem of polymorphic malware — self-propagating and mutating code programmed to alter its shape and signature in an attempt to evade detection. In an age where AI is more responsive, reflexive, and adaptable than ever, it’s feasible — perhaps even inevitable — that the evasion and obfuscation techniques will become much more flexible and advanced.

3. The exploitation of orchestration

Many organizations have automated and orchestrated critical systems “as code,” meaning behind-the-scenes processes have been configured and coordinated to perform certain functions immediately when they receive the proper prompts. As AI develops, criminals will likely invent new ways to infiltrate and corrupt such systems. The SOC should also be aware that generative AI is prone to making mistakes on its own, and as companies adopt new tools, new vulnerabilities may appear without any meddling from threat actors.

4. A multitude of malicious binaries

If generative AI can automatically write malicious code, it’s not a stretch to imagine a criminal using an AI tool to produce many distinct binaries that all perform the same function. The first one may be caught and its signature recorded in the detection library, but that would do nothing to stop the others. This should concern SOCs that rely on signature-based rules or immature machine learning capabilities.
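To make the limitation concrete, here is a minimal sketch of exact-match signature checking. It assumes a detection library that stores SHA-256 hashes of known samples, which is a deliberate simplification because real products use richer signatures. The two payloads are harmless stand-ins, and the names `signature_library` and `is_known` are hypothetical.

```python
import hashlib


def sha256_hex(payload: bytes) -> str:
    """Return the SHA-256 digest of a payload as a hex string."""
    return hashlib.sha256(payload).hexdigest()


# Two harmless stand-in "samples". Run as Python, both print 6,
# but their bytes differ, so their hashes differ too.
variant_a = b"print(sum([1, 2, 3]))\n"
variant_b = b"total = 0\nfor n in (1, 2, 3):\n    total += n\nprint(total)\n"

# Assumption: the library records only the hash of the first sample caught.
signature_library = {sha256_hex(variant_a)}


def is_known(payload: bytes, library: set) -> bool:
    """Exact-match lookup: flags a payload only if its hash was already recorded."""
    return sha256_hex(payload) in library


if __name__ == "__main__":
    print("variant_a flagged:", is_known(variant_a, signature_library))
    print("variant_b flagged:", is_known(variant_b, signature_library))
```

In this sketch the first variant is flagged and the second is missed, even though both do the same thing. Detection based on behavior rather than exact byte patterns is less exposed to this kind of variation.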

5. Constructing a labyrinth of lies

In the past, criminals have employed social engineering techniques such as “backstopping” to support their most highly targeted attacks, investing time and resources into building an elaborate smoke-and-mirrors network of fake people, products, and organizations that makes their fraudulent façade look more legitimate. Now, the world is entering an era where such deception takes almost no time and could become considerably more common.

The race, and chase, is on

Security experts are actively researching how AI can be used for harm and devising ways to counter these kinds of attacks. But as any security leader knows, the resourcefulness of highly motivated cybercriminals should never be underestimated. Attackers and defenders will always seek new ways to outmaneuver each other in a race that has been running for decades.

As a pioneer in delivering AI to security operations, Exabeam wants you to understand the monumental shifts in today’s AI-driven landscape. Check out the CISO’s Guide to the AI Opportunity in Security Operations to learn more.

Want to learn more about AI in the SOC?

Read our white paper: CISO’s Guide to the AI Opportunity in Security Operations. This guide is your key to understanding the opportunity AI presents for security operations. In it, we provide:

- Clear AI definitions: We break down different types of AI technologies currently relevant to security operations.

- Positive and negative implications: Learn how AI can impact the SOC, including threat detection, investigation, and response (TDIR).

- Foundational systems and solutions: Gain insights into the technologies laying the groundwork for AI-augmented security operations.
