Building Custom and Comprehensive Visibility and Security Enforcement for Generative AI

Authored by Steve Povolny, Stephen VanDyke, Alex Koshlich

At Exabeam, we’ve been talking a lot about artificial intelligence (AI) over the last year. Part of this is because it’s baked into our DNA, going back more than a decade, and deeply integrated into the heart of our products. However, the emergence of large language models (LLMs), coinciding with the staggering popularity of OpenAI’s ChatGPT, has developed at a pace no one saw coming. We’ve already capitalized on the newfound capabilities generative AI (GenAI) and LLMs provide — building, testing, and soon delivering unique features such as threat explanation and insights, natural language queries, and more. These are a natural progression of features that line up with the rich machine learning models and AI on which our technology is built.

Spider-Man’s Uncle Ben said it best: “With great power comes great responsibility.” As we harness the power of GenAI, we have a unique responsibility to protect against misuse and abuse. This blog post takes a look at some of the ways Exabeam is internally practicing what we preach and helping our customers do the same.

In this article:

- Leveraging Industry Partners and Tools for Visibility and Detection

- DIY with the Exabeam Security Operations Platform

- Building Visualization and Security Detection Content for Enterprise and Consumer LLMs

- Detection Techniques: Correlation Rules vs. Machine Learning

- The Future of Generative AI Security

Leveraging Industry Partners and Tools for Visibility and Detection

The first step in security is visibility. It’s why we recently finalized the process of identifying a third-party platform that could augment our internal tools and product capabilities, while enhancing our unique visibility into the usage of GenAI tools. We decided to integrate with Nightfall AI, a startup focused on cloud DLP and data exfiltration solutions for AI tools. In the category of GenAI, Nightfall AI is initially focused on OpenAI, and supports both default and customizable policies for usage of ChatGPT.

Exabeam’s IT security team leverages alerts from Nightfall AI to identify things like:

- Passwords or secrets entered into a ChatGPT session

- Intellectual property entered into a ChatGPT session

- Personally identifiable information (PII) accidentally shared via ChatGPT prompts

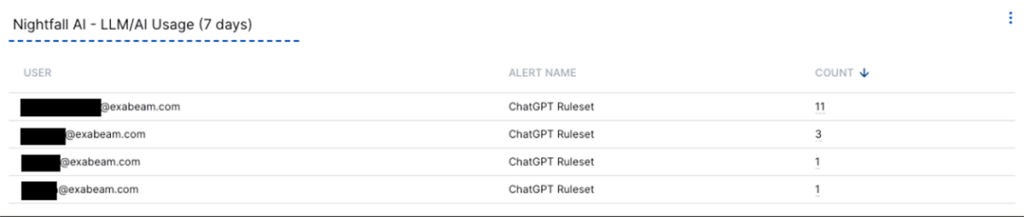

Using the custom detection policy, we also developed an alert that triggers any time the word “Exabeam” is entered into a ChatGPT session prompt. Since integrating the resulting policy alerts with our internal Exabeam SIEM environment, we’ve been able not only to observe Nightfall AI results, but also to visualize usage and detections within Exabeam products. Furthermore, we can correlate user behavior, both malicious and benign, with Nightfall activity to achieve comprehensive insights into insider threat detection. As an example, the following dashboard highlights the count and type of Nightfall AI-generated alerts and the users in our network triggering these alerts.

Using correlation rules, we can go one step further and build detection content for these alerts, adding in rules such as, “John Doe uploaded a GitHub password into a ChatGPT session.”

Exabeam’s IT security department has created the following correlation rule leveraging Nightfall AI policies.

- Nightfall AI – LLM/AI Alerts

The rule is simple but effective — it triggers on any Nightfall AI alerts (such as those described earlier) defined in Exabeam’s environment, for employees using ChatGPT. This rule will be generally available in an upcoming build release and can serve as inspiration for developing similar content.

DIY with the Exabeam Security Operations Platform

Your imagination is your only limit here — when working with any vendor or product that generates alerts and corresponding logs for GenAI tools, the Exabeam Security Operations Platform can provide an additional layer of security detection and visibility. For Nightfall AI, we’ve also created a default parser for its alert logs which provides customers with access to relevant fields for dashboarding and correlation rule creation.

In addition to end-user interactions with GenAI platforms, Exabeam began monitoring fourth-party integrations to various AI platforms for both software as a service (SaaS) and endpoint tools. While our internal IT security team manages integrations with SaaS services in our corporate environment, we recognized the need to raise awareness that not every organization may have the same level of control. By monitoring these integrations, the Exabeam Security Operations Platform can proactively detect any unauthorized or potentially malicious activities related to AI platforms.

Another area of focus for us was monitoring AI-related plug-ins and extensions for endpoint applications. To track this information, we developed custom scripting that pulls plug-in details from various endpoint applications (e.g., Visual Studio Code). However, we soon realized that relying solely on this approach would require constant updates as new applications and their support for plug-ins emerge. To overcome this challenge, we turned to DNS and NetFlow logs from our endpoints to identify any connections to cloud-based AI services. This approach enabled us to leverage the existing monitoring dashboards we had previously configured, making it easier to detect any unauthorized or suspicious activities related to generative AI.
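As a rough illustration of the plug-in inventory idea, here is a minimal Python sketch. It is not the scripting our IT security team uses. It assumes you have already collected the text output of `code --list-extensions` from an endpoint (one `publisher.name` identifier per line), and the keyword list and the function name `flag_ai_extensions` are hypothetical placeholders to tune for your own environment.

```python
# Hypothetical keyword list; adjust it to the AI tools that matter to you.
AI_KEYWORDS = ("copilot", "chatgpt", "openai", "codeium", "tabnine")


def flag_ai_extensions(list_output, keywords=AI_KEYWORDS):
    """Return extension IDs whose name contains an AI-related keyword.

    `list_output` is assumed to be the raw text printed by
    `code --list-extensions`, one extension ID per line.
    """
    flagged = []
    for line in list_output.splitlines():
        ext_id = line.strip().lower()
        # Skip blank lines, then do a simple substring match on the ID.
        if ext_id and any(keyword in ext_id for keyword in keywords):
            flagged.append(ext_id)
    return flagged


if __name__ == "__main__":
    sample = "GitHub.copilot\nms-python.python\nesbenp.prettier-vscode\n"
    print(flag_ai_extensions(sample))
```

A keyword match like this is easy to evade and has to be repeated for every application that supports plug-ins, which is the maintenance burden that pushed us toward DNS and NetFlow logs.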

Building Visualization and Security Detection Content for Enterprise and Consumer LLMs

There’s no clear-cut distinction between “consumer” and “enterprise” LLMs per se, except perhaps in terms of licensing and usage constraints. However, we’ve identified five of the top LLMs available to consumers in terms of market share and accessibility, along with their domains.

Consumer/Public LLMs

- ChatGPT – chat.openai.com

- Bard – bard.google.com

- Cohere – dashboard.cohere.com

- HuggingFace – huggingface.co/vendor/model

- Claude – claude.ai/chats

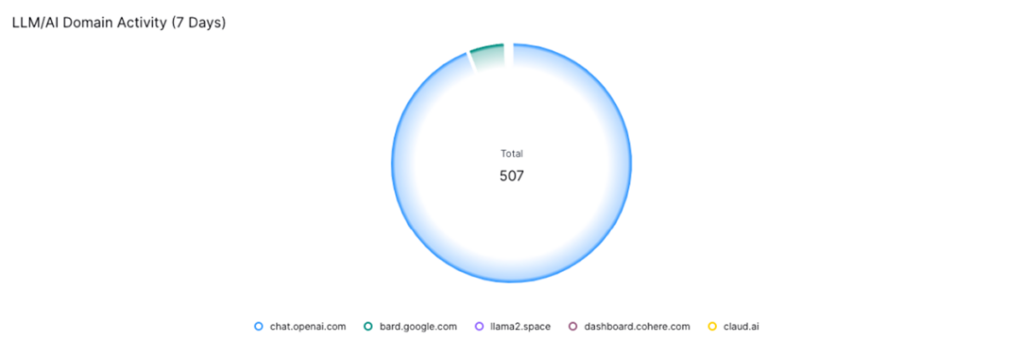

While widespread logs are not typically associated with these public LLMs, there are areas where we can effectively visualize a user’s access to these tools. For example, by leveraging site-based or cloud-based log collectors from third parties such as endpoint, network, proxy, and DLP solutions, we can determine whether a user visited one of these websites based on the domain and URL. While this may only be a small nugget of information, if we look at this behavior compared to a user’s normal interaction patterns with these LLMs, we can highlight scenarios that a SOC analyst may want to investigate.

CrowdStrike Endpoint DNS Log

{"DnsResponseType":"2","IP4Records":"redacted;redacted;","ContextThreadId":"36373556770168","aip":"redacted","CNAMERecords":"dashboard.netlifyglobalcdn.com;","QueryStatus":"0","dproc":"FDR Items explorer","InterfaceIndex":"0","event_platform":"Win","DualRequest":"1","EventOrigin":"1","id":"7d78fccf-7b18-4088-aa1f-24120d3efa11","EffectiveTransmissionClass":"3","FirstIP4Record":"redacted","timestamp":"1704832950810","event_simpleName":"DnsRequest","ContextTimeStamp":"1704832944.179","ConfigStateHash":"1241739805","ContextProcessId":"1022844757047","DomainName":"dashboard.cohere.com","destinationServiceName":"CrowdStrike","RespondingDnsServer":"192.168.0.1","ConfigBuild":"1007.3.0017706.1","DnsRequestCount":"1","Entitlements":"15","name":"DnsRequestV4","aid":"redacted","cid":"redacted","RequestType":"28"}
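To show how an event like this can be turned into something an analyst can use, here is a minimal Python sketch that parses one JSON event and checks its `DomainName` against the public LLM hostnames listed earlier. The field names come from the sample log above. The function names, the hostname-only watchlist, and the treatment of `timestamp` as epoch milliseconds are our assumptions for illustration, not a description of the Exabeam parser.

```python
import json
from datetime import datetime, timezone
from typing import Optional

# Hostnames from the consumer LLM list above (paths dropped).
LLM_DOMAINS = {
    "chat.openai.com",
    "bard.google.com",
    "dashboard.cohere.com",
    "huggingface.co",
    "claude.ai",
}


def match_llm_domain(domain: str) -> Optional[str]:
    """Return the watched domain that `domain` equals or is a subdomain of."""
    name = domain.lower().rstrip(".")
    for watched in LLM_DOMAINS:
        if name == watched or name.endswith("." + watched):
            return watched
    return None


def summarize_dns_event(raw: str) -> Optional[dict]:
    """Parse one JSON DNS event; return a small record if it hits the watchlist."""
    event = json.loads(raw)
    if event.get("event_simpleName") != "DnsRequest":
        return None
    watched = match_llm_domain(event.get("DomainName", ""))
    if watched is None:
        return None
    # Assumption: `timestamp` is epoch milliseconds, as the 13-digit value suggests.
    seen_at = datetime.fromtimestamp(int(event["timestamp"]) / 1000, tz=timezone.utc)
    return {
        "aid": event.get("aid"),
        "domain": event["DomainName"],
        "matched": watched,
        "seen_at": seen_at.isoformat(),
    }
```

Matching on the exact hostname or a dot-prefixed suffix avoids flagging look-alike names that merely end with the same characters.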

Enterprise-level LLMs are more focused on volume licensing, targeting organizations looking for large-scale automation projects with extended features and capabilities. They may include multiple types of models trained for specific purposes, and features like API access, access control lists (ACLs), volume-based pricing, and much more. We’ve listed several of the more popular enterprise LLMs as well.

Enterprise or Open Source GenAI Models and Frameworks

- Llama2 – Meta

- Bloom – HuggingFace

- Dolly 2.0 – Databricks

- VertexAI – Google

- MPT-30B – MosaicML

- GPT4 – OpenAI

- Claude – Anthropic

- Cohere – Cohere

For this case study, we’ll be leveraging Google’s VertexAI platform, which provides UI and API-based access to a number of models, including LLMs such as PaLM, SecPaLM, Gemini, and more. Exabeam and Google have a strong partnership, and we’ve been leveraging their models to support many natural AI-based integrations into our product.

Exabeam has been parsing and building default detection content for Google Cloud Platform (GCP) logs for some time. Given that Vertex is built on top of GCP, our default parsers provide unique capabilities to develop customized visualizations and detection models for usage of the LLM tools, whether benign, malicious, or unintentional. We are in the process of building, testing, and releasing industry-first detection content providing unique visibility into the use and abuse of VertexAI, both infrastructure and models.

Detection Techniques: Correlation Rules vs. Machine Learning

The Exabeam Security Operations Platform has multiple detection methods available to be used in these scenarios. A correlation rule, most often associated with traditional SIEM, can be used for immediate detection for specific events, or a group of events. This type of rule can be used in situations where you know exactly what you are looking for and want to be notified when it happens. For example, you may have a policy in place in your organization that prohibits the use of any public LLM. In that case, a correlation rule looking for specific domain access could be used to provide immediate notification of policy violations.
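The logic of such a rule can be sketched in a few lines of Python. This is an illustration of the idea only, not Exabeam correlation rule syntax. It assumes events have already been normalized into dictionaries with `user` and `domain` keys, and the rule name, the `Alert` class, and the prohibited list are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical policy: these public LLM domains are prohibited.
PROHIBITED_DOMAINS = ("chat.openai.com", "bard.google.com", "claude.ai")


@dataclass(frozen=True)
class Alert:
    rule: str
    user: str
    domain: str


def evaluate_public_llm_rule(events, prohibited=PROHIBITED_DOMAINS):
    """Emit one alert per (user, domain) pair that violates the policy."""
    alerts = []
    already_alerted = set()
    for event in events:
        domain = event["domain"].lower()
        violates = any(
            domain == blocked or domain.endswith("." + blocked)
            for blocked in prohibited
        )
        key = (event["user"], domain)
        # Suppress repeats so one user browsing one site yields one alert.
        if violates and key not in already_alerted:
            already_alerted.add(key)
            alerts.append(Alert("Public LLM access", event["user"], domain))
    return alerts
```

The rule fires on every match regardless of who the user is, which is exactly what you want for a blanket policy and exactly why it can be noisy for teams with a legitimate need for these tools.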

To expand upon detections, Exabeam utilizes machine learning models that look for abnormalities, which limits the number of false positive events. The Exabeam analytics engine builds models of user and asset activity based on the logs it receives. This allows it to learn a baseline of normal activity over time and, from that baseline, flag abnormal activity. This method of detection is useful when evaluating users or assets whose normal activity might look malicious if it came from another user or asset. An example of this would be developers who are working on projects that use LLMs. A correlation rule looking for specific activity may over-trigger for these types of users. In contrast, by modeling the normal activity of these users, we can then look for new or increased activity that may be an indicator of malicious activity, such as:

- First time this user has logged into VertexAI platform

- First time this user runs a “prediction” action for the PaLM model

- Administrative access granted to a new user for Vertex project

- First time anyone in the organization has run the SecPaLM security model
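The “first time” detections above can be illustrated with a deliberately simplified Python sketch. The Exabeam analytics engine is far more involved than this. The class name `FirstSeenModel`, the `training` flag, and the action strings are hypothetical and only show how a learned baseline separates routine activity from new activity.

```python
from collections import defaultdict


class FirstSeenModel:
    """Track which actions each user, and the organization, has done before."""

    def __init__(self):
        self._seen_by_user = defaultdict(set)
        self._seen_by_org = set()

    def observe(self, user, action, training=False):
        """Record an action and return any 'first time' findings.

        With training=True the action only updates the baseline and
        nothing is reported.
        """
        findings = []
        if action not in self._seen_by_org:
            findings.append(f"first time anyone in the organization did: {action}")
        if action not in self._seen_by_user[user]:
            findings.append(f"first time {user} did: {action}")
        self._seen_by_org.add(action)
        self._seen_by_user[user].add(action)
        return [] if training else findings


if __name__ == "__main__":
    model = FirstSeenModel()
    # Baseline period: a developer who routinely queries a model.
    model.observe("dev1", "vertexai:predict:palm", training=True)
    print(model.observe("dev1", "vertexai:predict:palm"))     # routine, no findings
    print(model.observe("analyst2", "vertexai:predict:palm"))  # new for this user
```

Because the developer's routine activity is part of the baseline, it stays quiet, while the same action from a user who has never done it before is surfaced.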

Again, the common theme here is that if you have the logs, an effective parser, and a good imagination, the opportunities to provide unique insight into your business’ GenAI and LLM-related risk posture are nearly limitless. Exabeam has always been a trailblazer in developing new model-based content for emerging threats, and this vertical is no different. We’d love to hear your ideas for valuable default content and visualization tools, whether you build it or we do! Please submit your ideas and success stories to the Exabeam Community.

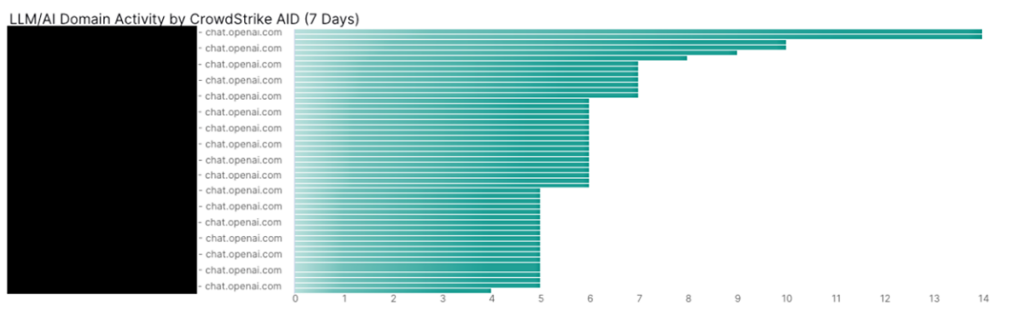

We offer a few illustrations below to provide some inspiration and motivation.

The dashboard above (Figure 3) shows a count of LLM/AI domains that were accessed per CrowdStrike AID (a unique ID referencing a user, which can be enriched with true usernames in the Exabeam Security Operations Platform). This is done in lieu of proxy logs to show which assets (both internal and remote) have accessed specific LLM/AI domains.
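The aggregation behind a view like this is simple to reproduce outside a dashboard. The Python sketch below assumes DNS events that have already been filtered down to LLM/AI domains and that carry the `aid` and `DomainName` fields shown in the sample log. The function name and the optional `usernames` lookup (a stand-in for enrichment) are hypothetical.

```python
from collections import Counter, defaultdict


def llm_domains_per_aid(events, usernames=None):
    """Count LLM/AI domain lookups per AID, optionally relabeled by username."""
    usernames = usernames or {}
    totals = defaultdict(Counter)
    for event in events:
        # Fall back to the raw AID when no username mapping is available.
        label = usernames.get(event["aid"], event["aid"])
        totals[label][event["DomainName"].lower()] += 1
    return {label: dict(counts) for label, counts in totals.items()}
```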

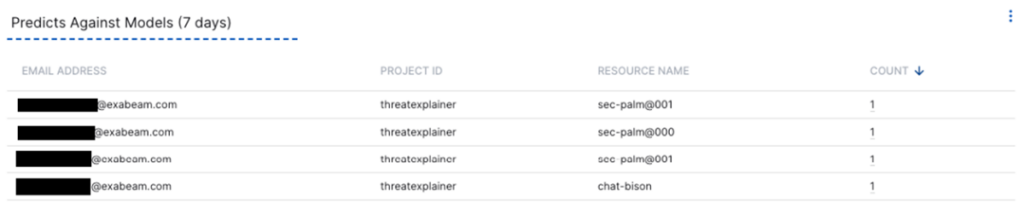

The dashboard above (Figure 4) shows the number of “predict” operations done against a specific model in a project in GCP. The “predict” operation is done when submitting a query to a model in GCP.

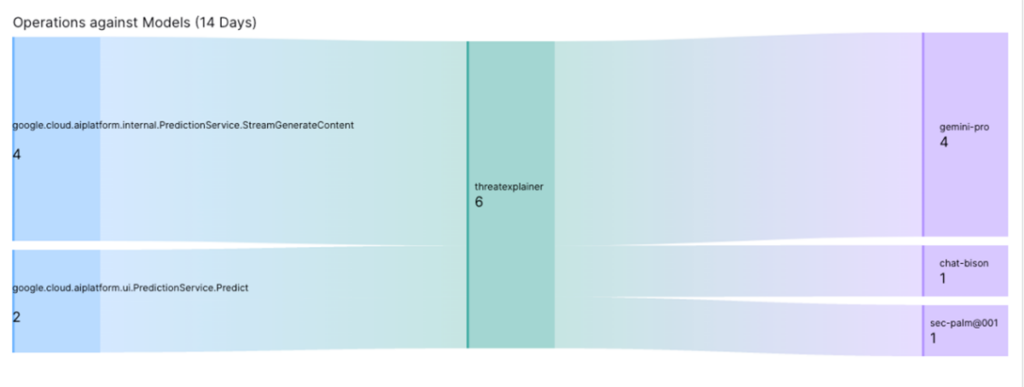

The dashboard above (Figure 5) shows the volume of operations done against models and the projects they are in. This is used to monitor activity inside your GCP infrastructure, specifically which operations are being done against models in projects.
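If you want to prototype these model-level views before building a dashboard, the grouping can be sketched in Python as follows. It assumes log records that have already been parsed into flat dictionaries with `project`, `model`, and `operation` keys. Those key names and both function names are hypothetical, and raw GCP audit logs would need to be parsed into this shape first.

```python
from collections import Counter


def operations_by_model(records):
    """Count every operation per (project, model, operation) triple."""
    return Counter(
        (record["project"], record["model"], record["operation"].lower())
        for record in records
    )


def predict_counts(records):
    """Count only 'predict' operations per (project, model) pair."""
    return Counter(
        (record["project"], record["model"])
        for record in records
        if record["operation"].lower() == "predict"
    )
```

Calling `.most_common()` on either result gives a ranked list, which is a quick way to spot the models and projects with the most activity.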

The Future of Generative AI Security

As the world moves forward with exciting innovation and novel usage of GenAI technologies, we have a collective responsibility to share both the security concerns that come with the AI revolution, and the tools and techniques to visualize and defend against attacks, external and internal. We hope that this case study has provided some insight into Exabeam’s approach, as well as inspiration for how to leverage your existing tools and infrastructure to see what you couldn’t see before.

As GenAI inevitably evolves, whether it augments or displaces people, process, and technology, Exabeam will equally adapt to the changing landscape, adopt tools, and offer techniques to protect customers and advocate for a balanced approach to security and functionality. For more information on the benefits and risks of leveraging AI in security operations, read A CISO’s Guide to the AI Opportunity in Security Operations, or request a demo to see the AI-driven Exabeam Security Operations Platform in action.
