The annual cost of detecting and resolving insider threats averaged $15.38 million in the US, up 34% from 2020, according to the 2022 Ponemon Cost of Insider Threats Report. Large organizations often spend more than small ones to mitigate insider incidents, with negligence accounting for about two-thirds of the incidents. Careless employees or contractors are typically the worst offenders.

The Ponemon report also found that 26 percent of incidents involved a malicious insider with criminal intent, and 18 percent involved stolen credentials, nearly double the figure in the previous study.

The report goes on to explain that the average time to contain an incident is 85 days, an increase from 77 days in the previous study. Cost rises with the time required to contain an incident. Incidents that took more than 90 days to contain cost $17.19 million annually, whereas incidents that lasted less than 30 days cost organizations $11.23 million, on average.

Ponemon confirms what you likely already know: the frequency and cost of insider threats of all types are rapidly increasing.

Yet such a study captures only known insider incidents. Among the 278 organizations surveyed, how many incidents went undetected? There is no way to know for sure, but cyber threats and incidents frequently go undetected and unreported, sometimes for years. What is clear is that your organization likely faces exposure to insider threats, and that exposure is probably larger than you anticipate.

In this article:

- Defining an insider

- What are common insider threat indicators?

- Conventional approaches to stop insider threats

- Using a behavior-based approach to proactively detect insider threats

- An intellectual property theft example

Defining an insider

Let’s first define the terms “insider” and “insider threat”, since there is no definitive agreement about what they mean. An insider has been legitimately empowered to access one or more of an organization’s network assets. Examples can include employees, contractors, vendors, partners, and ex-employees whose access has not been disabled. It can also include developers having backdoor access, and even members from mergers or acquisitions whose existing systems are being transitioned for inclusion in your network.

An insider threat is either an accidental or intentional act perpetrated against your organization’s assets by an insider or an outsider impersonating an insider. An insider incident is the loss resulting from one or more such threats.

While defining insider threat is important, what’s more important (critical even) is the recognition that these types of attacks represent the single largest threat to every organization. Compromised credentials, whether by insiders or external adversaries, are the holy grail for attackers. Why? Because traditional “rules, IOC, and signature-based” detections can’t help you against compromised credentials.

What are common insider threat indicators?

Let’s focus first on some of the more common insider threats. Here’s a list of some predictable behavioral indicators to watch for:

- Suspicious VPN activity — Connecting at unusual times from unusual locations or IP addresses

- Employees being notified of a layoff or other major negative event, affecting both those who are directly impacted and those remaining (but now with diminished morale)

- Departing employees who have just given their notice. It’s not uncommon for a person to create new accounts to gain access before their credentials are revoked, often prior to giving their notice.

- Someone downloading substantial amounts of data to external drives, or using unauthorized external storage devices

- A person accessing confidential data that isn’t relevant to their role

- Emailing sensitive information to a personal account

- Someone attempting to bypass security controls

- A person requesting clearance or higher-level access without actual need

- A former employee maintaining access to sensitive data after termination

- Network crawling, data hoarding, or copying from internal repositories

- Deviations in a person’s normal working hours pattern

As you can see, an insider threat is rarely a single event. It is usually a series of events that together put the organization at risk. The key is to proactively identify such risky behaviors and patterns, then alert your security operations center (SOC) so it can monitor for possible insider threats.
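A few of the indicators listed above can be expressed as simple checks over activity records. The following is a minimal sketch, not any product's detection logic: the `Event` fields, the personal-domain list, the working-hours window, and the 500 MB threshold are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime

# Hypothetical values for illustration only; tune them to your environment.
PERSONAL_DOMAINS = {"gmail.com", "yahoo.com", "outlook.com"}
WORK_START_HOUR, WORK_END_HOUR = 7, 19   # assumed normal working window
LARGE_COPY_BYTES = 500 * 1024 * 1024     # assumed "substantial" transfer size


@dataclass
class Event:
    user: str
    kind: str              # "vpn_login", "email_sent", or "usb_copy"
    timestamp: datetime
    recipient: str = ""    # only used by email_sent events
    size_bytes: int = 0    # only used by usb_copy events


def indicators(event: Event) -> list[str]:
    """Return the names of the indicators this single event matches."""
    found = []
    hour = event.timestamp.hour
    if event.kind == "vpn_login" and not (WORK_START_HOUR <= hour < WORK_END_HOUR):
        found.append("off_hours_vpn")
    if event.kind == "email_sent":
        domain = event.recipient.rsplit("@", 1)[-1].lower()
        if domain in PERSONAL_DOMAINS:
            found.append("email_to_personal_account")
    if event.kind == "usb_copy" and event.size_bytes >= LARGE_COPY_BYTES:
        found.append("large_external_copy")
    return found


def indicators_by_user(events: list[Event]) -> dict[str, set[str]]:
    """Collect the distinct indicators seen for each user."""
    seen: dict[str, set[str]] = {}
    for event in events:
        for name in indicators(event):
            seen.setdefault(event.user, set()).add(name)
    return seen
```

Grouping by user reflects the point that a single match means little on its own. A user who accumulates several distinct indicators is a more useful signal for the SOC than any one event.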

Conventional approaches to stop insider threats

Many organizations focus on data loss prevention (DLP) in their attempt to stop unauthorized data transfer (data exfiltration). However, many DLP solutions are time- and resource-intensive to operate. They typically require analysts to create individual rules for each policy and violation, and those rules can trigger hundreds or possibly thousands of events every day. This makes uncovering actual threats or incidents like finding the proverbial needle in a haystack.

Another conventional insider threat detection issue is not being able to understand insider intent. As mentioned, most insider threats are caused by negligence — not malice. If you find this to be true within your organization, you might consider training as a corrective action, rather than taking punitive steps. But in order to take such appropriate action, you need to understand the intent.

Using a behavior-based approach to proactively detect insider threats

Your goal should be to stop insider threats before they become incidents. Unlike external threats, insider threats typically evolve over a long period of time. To discover them, you have to be able to monitor user behavior that isn’t within a normal range, such as copying and staging files for later exfiltration, or the creation of new accounts for future use.

“It is vital that organizations understand normal employee baseline behaviors and also ensure employees understand how they may be used as a conduit for others to obtain information.”

Combating the Insider Threat, US Department of Homeland Security

A necessary tool in identifying insider threat behavior is a user and entity behavior analytics (UEBA) solution that applies data science across all user and asset activities to determine a normal baseline of expected behaviors. Then when behavior drifts away from that baseline, the solution brings those users and/or assets to the attention of security analysts.
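To make the idea of a baseline concrete, here is a minimal sketch that uses a z-score over a user's own history as a stand-in for the richer models a UEBA product would apply. The function name, the choice of metric (for example, emails sent per day), and the threshold of 3 standard deviations are assumptions for illustration, not a description of how any particular product works.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], today: float, threshold: float = 3.0) -> bool:
    """Flag today's value if it lies more than `threshold` standard
    deviations from the mean of the user's own history."""
    if len(history) < 2:
        return False           # not enough data to form a baseline
    mu = mean(history)
    sigma = stdev(history)     # sample standard deviation
    if sigma == 0:
        return today != mu     # any change from a perfectly constant baseline
    return abs(today - mu) / sigma > threshold


# Hypothetical daily counts of emails a user sent to external addresses.
daily_external_emails = [3, 5, 4, 6, 2, 5, 4]
print(is_anomalous(daily_external_emails, today=40))  # far outside the baseline
print(is_anomalous(daily_external_emails, today=6))   # within the normal range
```

Because the baseline is computed per user, the same value can be normal for one person and anomalous for another, which static rules cannot express.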

Exabeam UEBA takes a behavior-based approach by analyzing user behavior on networks, and applying advanced analytics to identify your riskiest users/assets based on their behavior/actions. It automatically analyzes relevant events from IT, productivity, and other security controls to calculate specific user and asset risk, making it easier to detect insider threats. Exabeam threat hunting capabilities allow analysts to then search for threats based on the identified behavioral artifacts and user context.

An intellectual property theft example

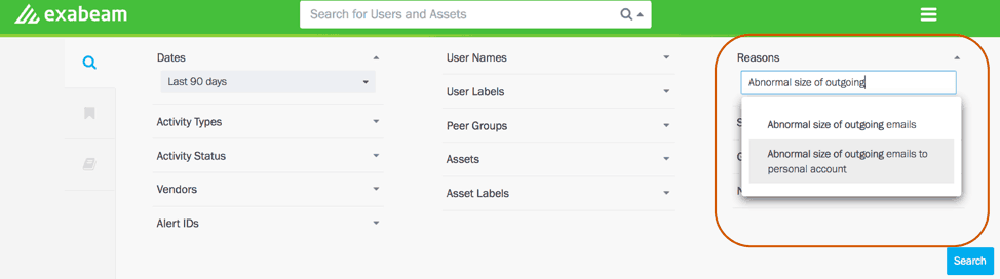

Let’s review a common scenario where an employee is leaving their job soon and is planning to steal intellectual property (IP). In the Exabeam dashboard, analysts can search for users who exhibit predictable malicious behavior by simply selecting from the risk reasons dropdown. This is one way to find the flagged users and put them on a watch list, and it is only one of the many insider threat investigations that you can conduct.

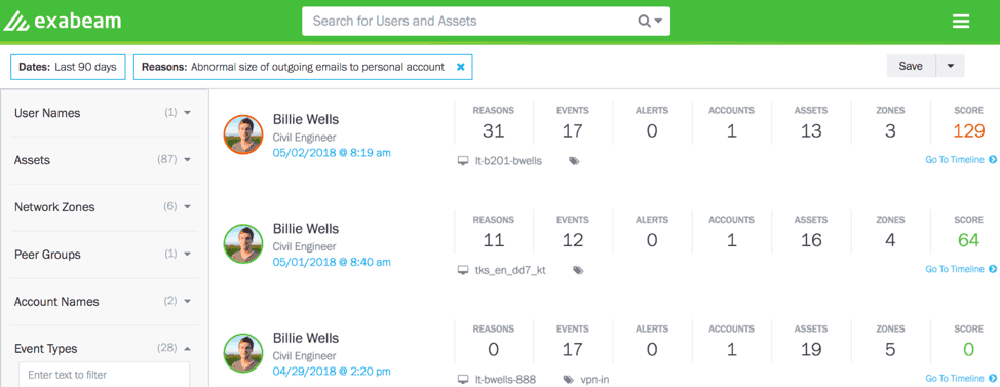

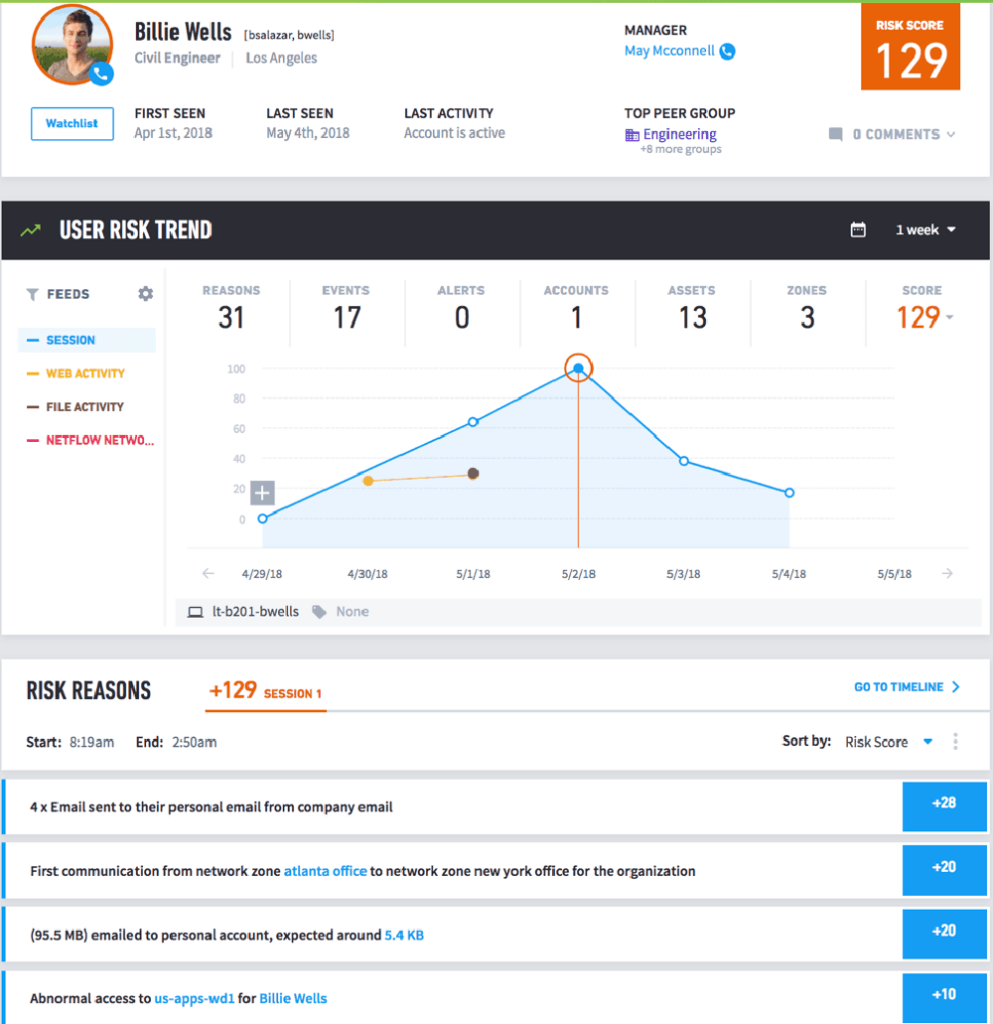

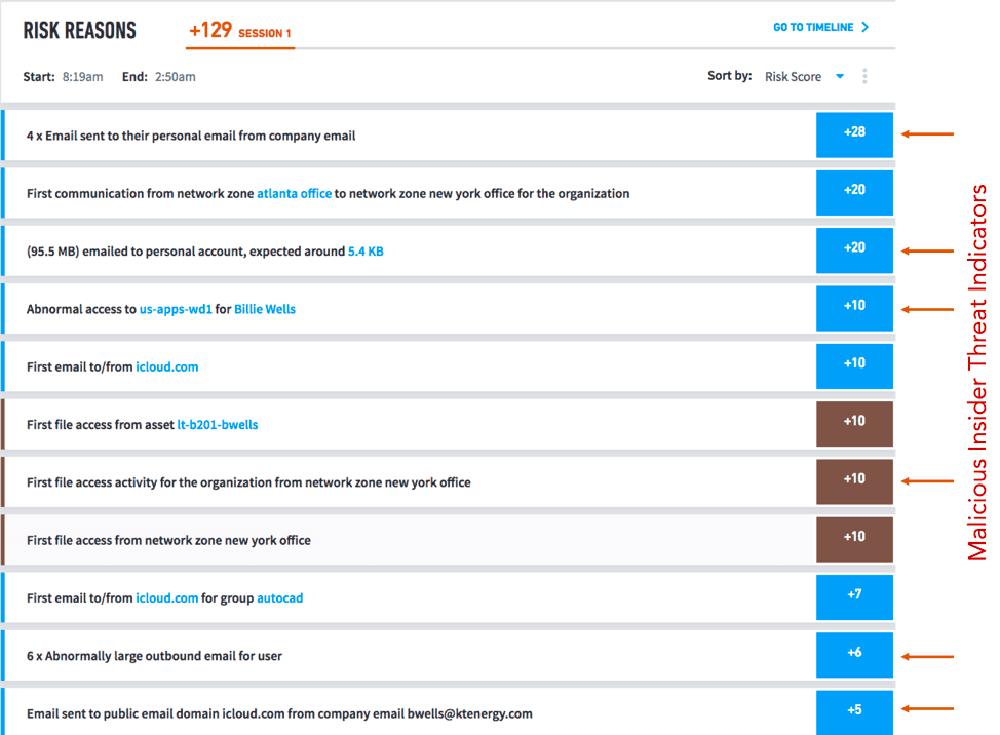

In Figure 2, one user has a risk score of 129 because they’ve sent a large number of emails to their personal account. Located beneath their score, the UEBA timeline provides analysts the related events and malicious actions to expose insider threats (Figure 3).

Clicking on the user’s timeline, analysts can examine all the events to gain complete context of this user’s actions and determine whether this is indeed an insider threat.

A search for one activity usually turns up other related events, because a threat rarely consists of a single event. The timeline lets analysts follow all the relevant events together in one view. You can also filter by activity type, such as file, web, or network.

As you can see, one activity alone doesn’t amount to an insider threat. If the user sends an abnormally large email to a personal account, this alone wouldn’t rise to a level of concern. But when combined with other abnormal behaviors, the series of activities is flagged as suspicious and risky. If we look at all the risk reasons for this user, we see many behavioral artifacts that together point to an insider threat.
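The way several individually minor anomalies add up to a notable score can be sketched with simple additive scoring. This is an illustration only: the anomaly names, point values, and the threshold of 90 are hypothetical and are not Exabeam's actual scoring model.

```python
# Hypothetical risk points per anomaly type; not any product's real values.
RISK_POINTS = {
    "large_email_to_personal_account": 25,
    "first_access_to_asset": 20,
    "abnormal_data_volume": 30,
    "off_hours_vpn": 15,
    "new_account_created": 40,
}
ALERT_THRESHOLD = 90  # assumed score at which a user is surfaced to analysts


def session_risk(anomalies: list[str]) -> int:
    """Sum the points for each distinct anomaly seen in a session."""
    return sum(RISK_POINTS.get(name, 0) for name in set(anomalies))


def is_notable(anomalies: list[str]) -> bool:
    """True when the combined score reaches the alert threshold."""
    return session_risk(anomalies) >= ALERT_THRESHOLD


# One large email on its own stays below the threshold.
print(is_notable(["large_email_to_personal_account"]))

# The same email combined with other abnormal behavior crosses it.
print(is_notable([
    "large_email_to_personal_account",
    "first_access_to_asset",
    "abnormal_data_volume",
    "off_hours_vpn",
]))
```

Counting each anomaly type once per session keeps a burst of identical events from inflating the score, so a high total reflects breadth of unusual behavior rather than repetition.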

Figure 4 shows data exfiltration (sending corporate data to personal accounts) coupled with abnormal access to other systems (lateral movement). This exposes a threat pattern in action.

Exabeam provides numerous models to thoroughly profile user behavior, so that when a user or asset is flagged as risky, you can be confident it warrants investigation.

Based on abnormal actions surfaced by Exabeam, analysts can easily put a watch on the user and revoke access if they exhibit additional anomalous behavior. Exabeam provides ample evidence to confront suspected users, reducing the risk of false accusations. No tool can provide a 100% guarantee that you won’t be the victim of an insider threat, but Exabeam enables investigators and security operations analysts to detect, investigate, and remediate insider threats before the rogue insider knows what hit them.

More insider threat scenarios are available in the Exabeam practitioner training series:

- Exabeam Practitioner Training: Insider Threat Investigations

- Security Operations Center Webinar – How to Find Malicious Insiders: Tackling Insider Threats Using Behavioral Indicators

