What You Don’t Know Can Hurt You: How to Use AI Responsibly

- Jan 24, 2024

- Steve Wilson

- 3 minutes to read

The mission of the security operations center (SOC) is to protect the organization’s data, as well as the data and privacy of users. To do that, it requires tools, systems, and solutions that analyze and produce reliable information, remain compliant with data protection and privacy regulations, and don’t behave unpredictably or irregularly.

Can the latest artificial intelligence (AI) tools and applications meet these criteria in their current iterations? Not always, which is why SOCs need to be conscientious as their organizations adopt these technologies, and as they’re increasingly deployed in security operations.

Peeking into the black box

Technologies such as generative AI are powered by large language models (LLMs), which are trained on massive datasets typically scraped from across the web. These datasets are so large that it is hard to know exactly what data a model has ingested, and harder still to track what happens to that data once it is inside the model.

Therefore, you might compare this type of AI to a black box — or perhaps a black hole, since once data goes into the model, there’s no getting it back out.

Data scientists are already making great progress in delimiting what models can and can’t ingest, and generative AI models that are purpose-built for specific industries and use cases can help impose guardrails. However, there can still be security implications when working with opaque systems and processes. Security teams need to be aware of these implications when using and monitoring AI, and when vetting the controls that potential solutions vendors have in place.

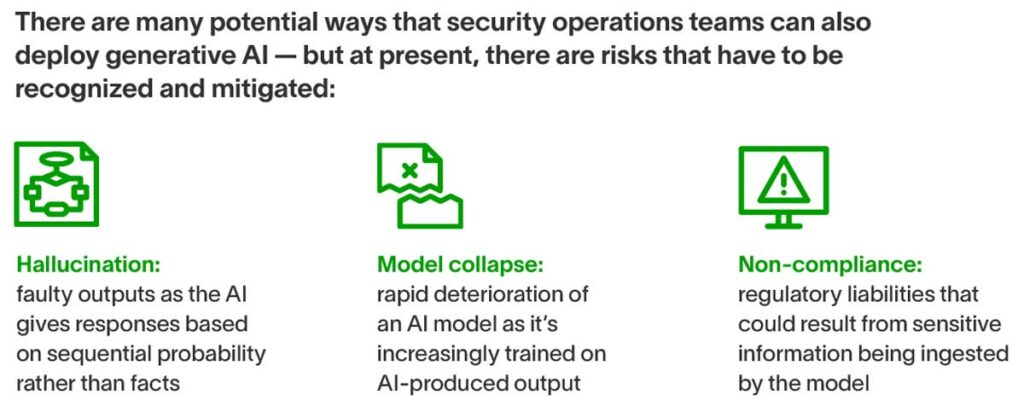

One risk is data leakage, in which sensitive or proprietary information is absorbed into the model; this could carry regulatory ramifications for organizations. Another risk is that when you don’t know what has gone into the model, you can’t fully trust what comes out of it, hence the well-documented challenges of AI hallucination.

How hallucinations happen

When you’re sending a text message, you may have noticed that your smartphone constantly suggests the next word you might be looking for. That may be a relatively rudimentary application of machine learning (ML), but it’s not entirely different from what’s happening when you use an application like ChatGPT.

As you watch generative AI automatically produce text, it’s not consulting some vast repository of information to provide you with an objective, verifiable truth; instead, it’s predicting the next most likely word in the context of your query. As a result, it can confidently and authoritatively produce statements that are false or nonsensical, a failure known as hallucination.
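To make the next-word idea concrete, here is a minimal sketch of a toy predictor that only counts which word tends to follow which. It is far simpler than an LLM, and the function names and the tiny corpus are invented for illustration, but it shows the same basic behavior: the output is whatever is statistically likely, not whatever is true.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

def generate(follows, start, length=5):
    """Greedily chain predictions; the result is fluent-looking, not fact-checked."""
    out = [start]
    for _ in range(length):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)

# Hypothetical training text: two of three past alerts happened to be benign.
corpus = "the alert was benign the alert was malicious the alert was benign"
model = train_bigrams(corpus)

print(predict_next(model, "was"))        # benign
print(generate(model, "the", length=3))  # the alert was benign
```

The toy model will describe any new alert as benign simply because that was the most common continuation in its training text, which is the same mechanism, at a much smaller scale, that lets a generative model state something untrue with complete confidence.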

For lay people, a hallucination may be amusing at best and inconvenient at worst. But security analysts must make quick decisions based on accurate insights, so it’s imperative that any generative AI tools they deploy are protected against this risk and flag when they have insufficient information rather than making something up.
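One simple way to picture "flagging insufficient information" is an abstention rule: answer only when there is enough supporting evidence and it points clearly in one direction. The sketch below is an illustration under assumed thresholds (`min_support` and `min_confidence` are hypothetical values, not settings from any product), and real generative AI guardrails are considerably more involved.

```python
from collections import Counter

INSUFFICIENT = "insufficient information"

def answer_or_abstain(evidence, min_support=5, min_confidence=0.8):
    """Return the dominant label in `evidence`, or abstain if support is weak."""
    # Too few observations: refuse rather than guess.
    if len(evidence) < min_support:
        return INSUFFICIENT
    label, count = Counter(evidence).most_common(1)[0]
    # Evidence is split: refuse rather than pick the slightly likelier answer.
    if count / len(evidence) < min_confidence:
        return INSUFFICIENT
    return label

print(answer_or_abstain(["benign"] * 9 + ["malicious"]))      # benign
print(answer_or_abstain(["benign", "malicious"]))             # insufficient information
print(answer_or_abstain(["benign"] * 3 + ["malicious"] * 3))  # insufficient information
```

The design choice is that an explicit "insufficient information" result is treated as a valid output, so an analyst sees a gap instead of a confident guess.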

What is model collapse?

Another cause for concern is model collapse: the tendency for the quality of a generative AI model’s outputs to deteriorate surprisingly quickly as AI-generated content makes up more and more of the data the model is trained on.

It goes back to the aforementioned tendency of generative AI to deliver outputs based on probabilistic likelihood instead of genuine truth. AI-generated materials quickly gloss over and lose the minority characteristics present in the original data, which means that as these materials are incorporated into training sets, the resulting models become less and less representative of reality.
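The loss of minority characteristics can be illustrated with a toy simulation. In the sketch below, each "generation" is trained only on samples drawn from the previous generation’s output. The category names, counts, and sample size are made up, and the code captures only the sampling-error part of the effect, not the behavior of a real LLM.

```python
import random
from collections import Counter

def next_generation(counts, sample_size, rng):
    """Sample from the current distribution and return the new observed counts."""
    labels = list(counts)
    weights = [counts[label] for label in labels]
    samples = rng.choices(labels, weights=weights, k=sample_size)
    return Counter(samples)

def simulate(initial_counts, generations=30, sample_size=100, seed=0):
    """Repeatedly retrain on the previous generation's own output."""
    rng = random.Random(seed)
    history = [Counter(initial_counts)]
    for _ in range(generations):
        history.append(next_generation(history[-1], sample_size, rng))
    return history

# Hypothetical starting data: one dominant pattern and two minority patterns.
history = simulate({"common": 90, "uncommon": 8, "rare": 2})
for gen in (0, 10, 20, 30):
    print(gen, dict(history[gen]))
```

The exact counts depend on the random seed, but one property holds in every run: once a category fails to appear in a generation, it has zero weight from then on and can never come back. Rare categories are the most likely to disappear this way, so diversity can only shrink over time.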

Are there transparent models?

Traditional ML typically requires data scientists to build and train models for specific use cases, meaning they tend to be accountable for what goes into the model and what comes out the other side. Unsurprisingly, ML and its more advanced offshoot, deep learning, have driven the most reliable AI solutions for the cybersecurity space and, for years, have been used to analyze immense stores of information and identify patterns.

At Exabeam, our AI-driven Security Operations Platform uses ML to enable everything from assigning dynamic risk scores to user and entity behavior analytics (UEBA), which learns an organization’s baseline of normal activity in order to flag deviations effectively. AI capabilities like these remain essential even as generative AI uncovers new ways to augment defenses, and they are especially critical as generative AI is used to augment attacks. Download the CISO’s Guide to the AI Opportunity in Security Operations for a fuller examination of these shifts.
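As a rough illustration of the baseline-and-deviation idea behind behavior analytics, the sketch below scores a new observation by how many standard deviations it sits above a user’s historical average. This is a generic z-score example, not a description of how the Exabeam platform computes risk; the function names, the threshold, and the login counts are all assumptions.

```python
from statistics import mean, stdev

def risk_score(baseline, observed):
    """Standard deviations by which `observed` exceeds the baseline mean (0 if below)."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # needs at least two baseline observations
    if sigma == 0:
        # Perfectly constant baseline: any change at all is a deviation.
        return 0.0 if observed == mu else float("inf")
    return max(0.0, (observed - mu) / sigma)

def flag(baseline, observed, threshold=3.0):
    """Flag activity whose score meets or exceeds the (hypothetical) threshold."""
    return risk_score(baseline, observed) >= threshold

# Hypothetical daily login counts for one user over a week.
logins_per_day = [4, 5, 6, 5, 4, 6, 5]

print(flag(logins_per_day, 5))   # False: in line with the baseline
print(flag(logins_per_day, 40))  # True: far outside normal behavior
```

Because every step here is an explicit calculation on known inputs, an analyst can trace exactly why an event was flagged, which is the kind of accountability the article attributes to traditional ML.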

Read our white paper: CISO’s Guide to the AI Opportunity in Security Operations. This guide is your key to understanding the opportunity AI presents for security operations. In it, we provide:

- Clear AI definitions: We break down different types of AI technologies currently relevant to security operations.

- Positive and negative implications: Learn how AI can impact the SOC, including threat detection, investigation, and response (TDIR).

- Foundational systems and solutions: Gain insights into the technologies laying the groundwork for AI-augmented security operations.

Steve Wilson

Chief AI and Product Officer | Exabeam

Steve Wilson is Chief AI and Product Officer at Exabeam. Wilson leads product strategy, product management, product marketing, and research at Exabeam. He is a leader and innovator in AI, cybersecurity, and cloud computing, with over 20 years of experience leading high-performance teams to build mission-critical enterprise software and high-leverage platforms. Before joining Exabeam, he served as CPO at Contrast Security leading all aspects of product development, including strategy, product management, product marketing, product design, and engineering. Wilson has a proven track record of driving product transformation from on-premises legacy software to subscription-based SaaS business models including at Citrix, accounting for over $1 billion in ARR. He also has experience building software platforms at multi-billion dollar technology companies including Oracle and Sun Microsystems.
