
Fighting Malicious Insider Threats with Data Science

One of the key benefits of a Security Information and Event Management (SIEM) platform with User and Entity Behavior Analytics (UEBA) is the ability to solve security use cases without needing to be a data scientist. The platform masks the underlying complexity of data science so that Security Operations Center (SOC) staff can focus on keeping the enterprise safe from attacks. But if you’ve wondered what exactly is going on under the hood, this article provides a high-level overview of how the Exabeam Security Management Platform (SMP) uses data science to address one of the most important and elusive use cases: insider threat detection.

Insiders are people who are trusted by the organization: employees or third parties, such as contractors. If they sabotage business operations or steal intellectual property or sensitive data, the financial, regulatory and reputational repercussions can be severe. The big problem for SOCs is that insiders are authorized to use IT resources. Conventional security tools that rely on legacy correlation rules have little power to detect when someone’s apparently authorized actions have malicious intent.

The inherent limitations of static correlation rules have shifted IT security and management solutions toward machine learning (ML). This approach identifies malicious insider activity by leveraging the enormous amount of available operational and security log data in conjunction with data enrichment. The security industry calls this data-focused approach User and Entity Behavior Analytics (UEBA). Let’s look at some aspects of how data science is used to address the malicious insider threat use case.

Use of statistical analysis for anomaly detection

For the malicious insider threat use case, Exabeam employs an unsupervised learning method to profile a user’s normal behavior and alert on deviations from it. This technique is used because the volume of data on actual insider threat incidents is low, and traditional supervised ML requires much larger amounts of data for accurate results. Unsupervised learning based on statistics and probability analysis is the primary technical means of implementing UEBA.

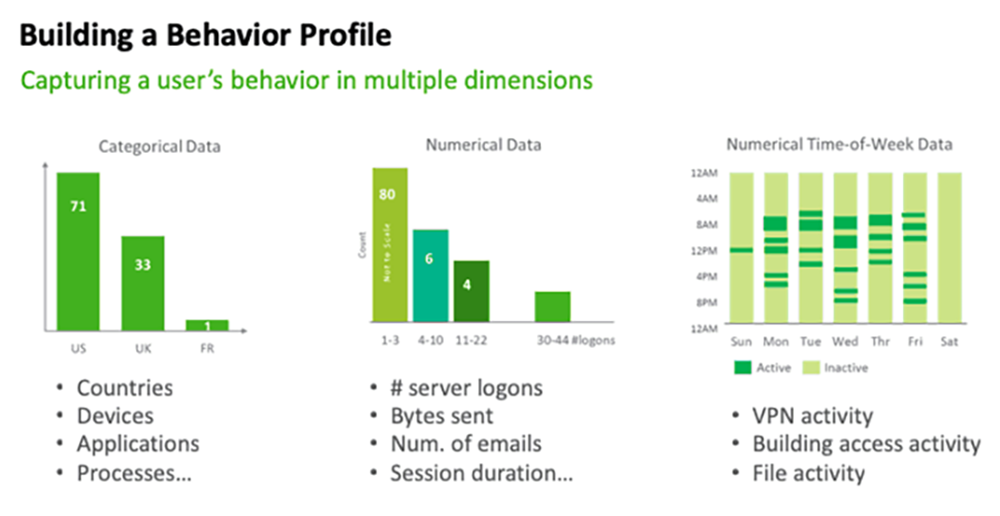

Statistical analysis helps our UEBA solution profile the normalcy of events. Examples of three types of profiled data are shown in Figure 1 as histograms. High-probability events, determined from profiled histograms or clustering analysis, are deemed benign. Outlying, low-probability events are treated as anomalous and may indicate security events. This is similar to a SOC analyst manually sifting through large volumes of log data to find meaning, except that the machine can analyze more data automatically, more quickly, and with high accuracy. Statistics and probability analysis are the basis for UEBA’s identification of normal behavior, and of the deviations that reveal abnormal and potentially malicious behavior by insiders.
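The histogram idea can be sketched in a few lines of Python. This is a minimal illustration, not Exabeam's implementation: the function names, the sample data, and the 0.05 probability threshold are all assumptions made for the example.

```python
from collections import Counter

def build_profile(events):
    """Count how often each value (e.g. a source country) was seen historically."""
    return Counter(events)

def event_probability(profile, value):
    """Empirical probability of `value` under the profiled histogram."""
    total = sum(profile.values())
    return profile[value] / total if total else 0.0

def is_anomalous(profile, value, threshold=0.05):
    """Flag values whose historical probability is below a hypothetical threshold."""
    return event_probability(profile, value) < threshold

# Hypothetical history: countries from which one user's VPN sessions originated.
history = ["US"] * 45 + ["CA"] * 4 + ["UK"] * 1
profile = build_profile(history)

for country in ("US", "CA", "UK", "BR"):
    print(country, event_probability(profile, country), is_anomalous(profile, country))
```

A value the user has never produced before gets probability zero and is always flagged, which is why a production system would likely also weigh how much history has been collected before trusting the profile.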

Contextual information derivation for network intelligence

Context information consists of labeled attributes and properties of network users and entities. This information is vital for calibrating the risk of anomalous events, as well as for the triage and review of alerts.

Example context derivation use cases include service account estimation, account resolution, and personal email identification. Service accounts are used for asset and rights administration, so their higher level of privileges makes them valuable to a malicious insider; yet service accounts are infrequently tracked in large IT environments. Service accounts and general staff user accounts show different behaviors. Data science can find unknown service accounts by analyzing textual data in Active Directory (AD), or classify accounts based on behavioral cues. In this manner, data science provides visibility into this potentially risky vector used by malicious insiders.
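As a rough illustration of the text-based approach, the sketch below looks for hint words in an account's name and description. The hint list, function names, and sample accounts are hypothetical; a real system would more plausibly learn such cues from data and combine them with behavioral features.

```python
import re

# Hypothetical hint words, chosen only for this example.
SERVICE_HINTS = {"svc", "service", "backup", "batch", "sql", "scanner"}

def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return set(re.findall(r"[a-z]+", text.lower()))

def service_hint_hits(account_name, description):
    """Return the hint words found in the account name or description."""
    return (tokenize(account_name) | tokenize(description)) & SERVICE_HINTS

def looks_like_service_account(account_name, description, min_hits=1):
    """Treat an account as a likely service account if enough hints match."""
    return len(service_hint_hits(account_name, description)) >= min_hits

print(looks_like_service_account("svc_sqlbackup01", "Nightly SQL backup job"))
print(looks_like_service_account("jsmith", "Jane Smith, Finance"))
```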

Account resolution provides a security view of users with multiple accounts. The individual account activity streams may look normal to traditional security tools, but the merged activities from multiple accounts belonging to the same user may reveal interesting anomalies. This frequently occurs when an insider logs into their regular account and then jumps to another account with unconnected and unusual behavior. Account resolution enabled by data science looks at activity data and determines whether two accounts in fact belong to one user.
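One simple way to approximate account resolution is to compare where two accounts are used. The sketch below assumes, purely for illustration, that accounts sharing most of their source hosts likely belong to the same person; the Jaccard measure, the 0.5 threshold, and the host names are assumptions, not the method described in the original article.

```python
def jaccard(a, b):
    """Jaccard similarity of two collections: |intersection| / |union|."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def likely_same_user(hosts_a, hosts_b, threshold=0.5):
    """Guess that two accounts share an owner if their source hosts overlap enough."""
    return jaccard(hosts_a, hosts_b) >= threshold

# Hypothetical source hosts observed per account.
hosts = {
    "jdoe": {"wks-17", "wks-22"},
    "jdoe-adm": {"wks-17", "wks-22", "srv-db1"},
    "asmith": {"wks-40"},
}

print(likely_same_user(hosts["jdoe"], hosts["jdoe-adm"]))
print(likely_same_user(hosts["jdoe"], hosts["asmith"]))
```

Once two accounts are linked, their activity streams can be merged and profiled as one user, which is where the cross-account anomalies become visible.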

Data exfiltration via personal email accounts is an attack vector frequently employed by malicious insiders. For example, a user connects to a personal webmail account and sends an unusually large file attachment. Based on historical behavior data, it is possible to connect an external email account to an internal user, thus providing future visibility into data exfiltration by a malicious insider.
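A minimal sketch of linking an external address to an internal user from historical mail logs follows. It assumes a hypothetical log of (internal sender, external recipient) pairs and links an address only when one sender clearly dominates its traffic; the `min_count` and `min_share` cutoffs are illustrative, not values from the article.

```python
from collections import Counter, defaultdict

def link_external_addresses(email_log, min_count=3, min_share=0.8):
    """Map each external address to the internal user who dominates mail sent to it."""
    senders = defaultdict(Counter)
    for internal_user, external_addr in email_log:
        senders[external_addr][internal_user] += 1

    links = {}
    for addr, counts in senders.items():
        user, top = counts.most_common(1)[0]
        total = sum(counts.values())
        # Link only if one user sends often enough and accounts for most of the traffic.
        if top >= min_count and top / total >= min_share:
            links[addr] = user
    return links

# Hypothetical log entries.
email_log = (
    [("alice", "a.personal@example.com")] * 5
    + [("bob", "a.personal@example.com")]
    + [("bob", "vendor@example.org"), ("carol", "vendor@example.org"),
       ("dave", "vendor@example.org")]
)
print(link_external_addresses(email_log))
```

An address that many employees write to, such as a vendor mailbox, is left unlinked because no single sender dominates it.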

Meta learning for false positive control

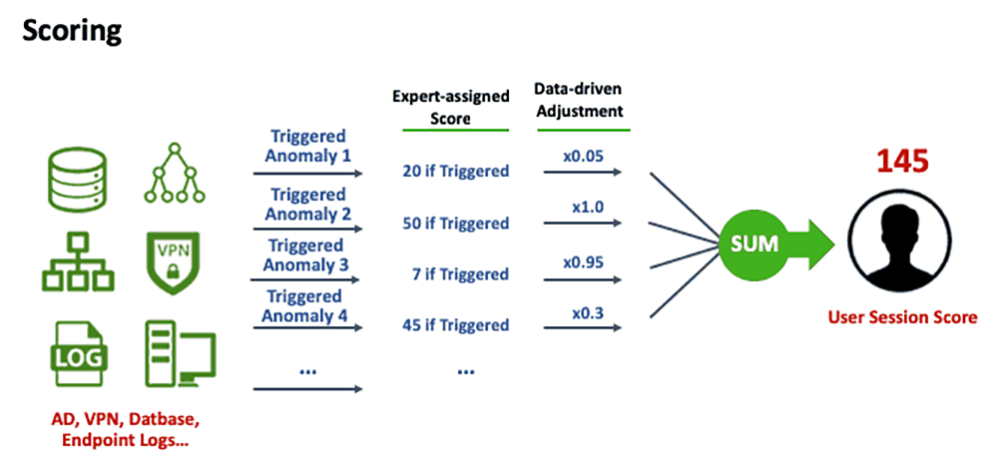

False positives waste time and cause alert fatigue for security analysts who have little time to spare. Some indicators are more accurate than others, and those with weaker statistical strength are prone to false positive alerts. Meta learning with data science allows the UEBA system to automatically learn from its own behavior to improve its detection performance. One way is to refine the initial expert-assigned scores through data-driven adjustment, as illustrated in Figure 2. The scoring adjustment examines alert triggers and their frequencies across the population and within a user’s history.
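The following sketch shows one possible form of such an adjustment: an indicator that fires often, either across the population or for this particular user, has its expert-assigned score scaled down. The multiplicative formula and parameter names are assumptions made for illustration and are not taken from Figure 2.

```python
def adjusted_score(expert_score, population_trigger_rate, user_trigger_rate):
    """Down-weight an expert-assigned score for indicators that fire frequently.

    Both rates are fractions in [0, 1]: how often the indicator triggers across
    all users, and how often it has triggered in this user's own history.
    """
    for rate in (population_trigger_rate, user_trigger_rate):
        if not 0.0 <= rate <= 1.0:
            raise ValueError("trigger rates must be in [0, 1]")
    return expert_score * (1.0 - population_trigger_rate) * (1.0 - user_trigger_rate)

print(adjusted_score(40, 0.0, 0.0))  # a rare indicator keeps its full score
print(adjusted_score(40, 0.5, 0.5))  # a noisy indicator is heavily discounted
```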

Another false positive control is to intelligently suppress some noisy alerts, such as those raised when a user accesses an asset for the first time. By learning from historical access data, the UEBA system can automatically build a recommender system that estimates whether the user’s first access to the asset could have been predicted. If so, the corresponding alert is suppressed and contributes nothing to the score, which minimizes false positive noise.
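A very small stand-in for such a recommender is a peer-based check: if enough of a user's peers already access the asset, the user's first access is treated as predictable. The peer-group definition, the 0.5 cutoff, the base score, and all names below are hypothetical choices for this sketch.

```python
def first_access_predictable(user, asset, access_history, peers, min_peer_share=0.5):
    """Return True if enough of the user's peers have already accessed the asset."""
    peer_list = [p for p in peers.get(user, []) if p != user]
    if not peer_list:
        return False
    sharing = sum(1 for p in peer_list if asset in access_history.get(p, set()))
    return sharing / len(peer_list) >= min_peer_share

def score_first_access(user, asset, access_history, peers, base_score=10):
    """Suppress the alert (score 0) when the first access was predictable."""
    if first_access_predictable(user, asset, access_history, peers):
        return 0
    return base_score

# Hypothetical access history and peer groups (e.g. same department).
access_history = {"bob": {"wiki", "build-srv"}, "carol": {"build-srv"}, "dave": {"wiki"}}
peers = {"alice": ["bob", "carol", "dave"]}

print(score_first_access("alice", "build-srv", access_history, peers))
print(score_first_access("alice", "hr-db", access_history, peers))
```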

Conclusion

The unique challenges of insider threat detection cannot be addressed by the conventional means of correlation rules. The statistical and probability-based approaches in UEBA offer the most promising solutions for detecting such threats; however, no algorithm is a silver bullet. Finding insider threats requires multi-pronged, data-centric approaches such as those described above. The recent use of data science to analyze existing data available in the enterprise SIEM creates new possibilities for effective insider threat detection. If you would like to take a deeper dive into this topic, check out my original article in Cybersecurity: A Peer-Reviewed Journal, Volume 2 / Number 3 / Winter 2018–19, pp. 211-218(8).

Learn more about Insider Threats

Have a look at these articles:

- What Is an Insider Threat? Understand the Problem and Discover 4 Defensive Strategies

- Insider Threat Indicators: Finding the Enemy Within

- How to Find Malicious Insiders: Tackling Insider Threats Using Behavioral Indicators

- Crypto Mining: A Potential Insider Threat Hidden In Your Network